
Interactive Jobs

In addition to running standard batch jobs through a Slurm job script, researchers can use compute resources interactively with the srun and salloc commands.

Understanding srun and salloc

srun runs a given command on the HPC cluster through Slurm. The --pty flag runs the command in a pseudo-terminal and attaches its standard input, output, and error to your current terminal, providing an interactive experience.

salloc allocates resources on the cluster and holds them for you to use however you need. The typical flow for using it is:

- Allocate HPC resources

- Use the HPC resources with srun or ssh

- Deallocate HPC resources

Working in a salloc allocation can be compared to an sbatch script: in both cases, commands run one after another on the same allocated resources. The primary difference is that with salloc you enter each command interactively, while sbatch runs the commands in your script without any interaction.

Interactive Jobs with srun

An interactive job can be started with the srun command. However, you must pass specific arguments for it to become interactive:

srun [options] --pty /bin/bash

Example:

[abc123@wind]:~$ srun --pty /bin/bash

srun: job 9977591 queued and waiting for resources

srun: job 9977591 has been allocated resources

[abc123@cn59]:~$ hostname

cn59

This starts a Slurm job that runs bash and connects your terminal session to the new bash instance. However, running srun without any additional resource arguments gives your job the default resource allocations and time limits.

Here are some common flags you may want to use to request more resources:

- -c [num]: Number of CPU cores to allocate.

- --mem=[size]: Amount of memory to allocate. Size can be given in a human-readable format, such as 5GB for 5 gigabytes.

- --gres=[list]: Comma-separated list of generic resources to request; each entry has the form name[[:type]:count], such as gpu:2. View our page on GPU-Accelerated Jobs for valid entries.

- --x11: Enables X11 forwarding. See our page on X11 forwarding to set it up.

Example:

[abc123@wind]:~$ srun -c 4 --mem=4GB --gres=gpu --pty /bin/bash
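If you request the same kind of session often, a small shell function can assemble the flags above so you do not have to retype them. This is a minimal sketch: the name ijob and its argument order (cores, memory, optional GRES) are hypothetical choices, not a command provided by the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not provided by the cluster): start an interactive
# bash session with a chosen core count, memory size, and optional GRES.
# Usage: ijob CORES MEM [GRES]
ijob() {
  local cores="${1:?usage: ijob CORES MEM [GRES]}"
  local mem="${2:?usage: ijob CORES MEM [GRES]}"
  local gres="${3:-}"

  if [ -n "$gres" ]; then
    # --gres requests generic resources such as GPUs
    srun -c "$cores" "--mem=$mem" "--gres=$gres" --pty /bin/bash
  else
    srun -c "$cores" "--mem=$mem" --pty /bin/bash
  fi
}
```

With this function defined (for example in your ~/.bashrc), ijob 4 4GB gpu runs the same srun command as the example above, and ijob 2 8G omits the --gres flag.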

Note: If your connection is lost while your job is active, your interactive job will end.

Ending an Interactive Job With srun

Once you are done with an interactive job, end it by running the exit command or by closing your terminal.

[abc123@cn59]:~$ exit

Interactive Jobs with salloc

If you have programmed in a low-level language such as C, you may know the typical memory allocation flow when using malloc():

- Allocate the memory

- Use the memory

- Deallocate the memory when done

Allocating resources with Slurm is performed in a very similar manner, but there are multiple ways to use the resources you allocate.

Allocating Resources

The salloc command allocates the resources you specify for later use. Its usage is:

salloc [options] [command [args]]

In the [options] section, specify the resources your work needs. Otherwise, the allocation receives only the default job resources, and the commands you run may not have enough resources to work with.

Most of the flags used with srun are available here as well. Some common ones include:

- -c [num]: Number of CPU cores to allocate.

- --mem=[size]: Amount of memory to allocate. Size can be given in a human-readable format, such as 5GB for 5 gigabytes.

- --gres=[list]: Comma-separated list of generic resources to request; each entry has the form name[[:type]:count], such as gpu:2. View our page on GPU-Accelerated Jobs for valid entries.

Note: You do not have to give salloc a command. If no command is provided, salloc runs your default shell (typically bash).
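When you do pass a command, salloc runs it inside the new allocation and relinquishes the allocation as soon as that command exits, so allocate, use, and deallocate happen in one step. A common pattern is to pass srun as the command so the work runs on the allocated node. The sketch below wraps this in a function; the name run_once is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: allocate resources, run one command on them with srun,
# and let salloc release the allocation when that command finishes.
# Usage: run_once CORES MEM COMMAND [ARGS...]
run_once() {
  if [ "$#" -lt 3 ]; then
    echo "usage: run_once CORES MEM COMMAND [ARGS...]" >&2
    return 2
  fi
  local cores="$1" mem="$2"
  shift 2
  # Everything after the salloc options is the command salloc runs;
  # here that command is srun, which launches "$@" on the allocated node.
  salloc -c "$cores" "--mem=$mem" srun "$@"
}
```

For example, run_once 4 4GB hostname prints the name of the allocated compute node, after which salloc relinquishes the allocation without further input.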

Example:

[abc123@wind]:~$ salloc -c 4 --mem=4GB --gres=gpu

salloc: Granted job allocation <jobid>

salloc: Nodes <nodelist> are ready for job

Once you have allocated the resources, you are ready to use them.

Note: salloc places you in a nested bash shell that still runs on the login node. If you exit this shell normally, the resources are deallocated immediately.
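Because the nested shell runs on the login node, the prompt looks the same before and after salloc. One way to tell them apart is to check the environment variables salloc exports into the new shell, such as SLURM_JOB_ID and SLURM_JOB_NODELIST. A minimal sketch; the function name alloc_info is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether this shell is inside a Slurm allocation.
# salloc exports SLURM_JOB_ID and SLURM_JOB_NODELIST into the shell it starts.
alloc_info() {
  if [ -n "${SLURM_JOB_ID:-}" ]; then
    echo "Inside allocation ${SLURM_JOB_ID} on nodes ${SLURM_JOB_NODELIST:-unknown}"
  else
    echo "Not inside a Slurm allocation"
    return 1
  fi
}
```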

Using Allocated Resources – srun

Once resources are allocated, commands can be run on the allocated resources with the srun command.

[abc123@wind]:~$ salloc -c1 --mem=4G

salloc: Granted job allocation 9991364

salloc: Nodes cn69 are ready for job

[abc123@wind]:~$ srun hostname

cn69

[abc123@wind]:~$ srun hostname

cn69

[abc123@wind]:~$ srun hostname

cn69
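Running srun outside an allocation does not fail; it starts a new, separate job with default resources. If you want to be sure a command lands on resources you already allocated with salloc, you could guard it as in this sketch. The name alloc_run is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command with srun only when this shell holds a
# salloc allocation, so it cannot silently start a separate job.
alloc_run() {
  if [ -z "${SLURM_JOB_ID:-}" ]; then
    echo "alloc_run: no allocation found; run salloc first" >&2
    return 1
  fi
  srun "$@"
}
```

Inside the allocation from the example above, alloc_run hostname behaves like srun hostname; outside it, the function prints an error instead of submitting a new job.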

Using Allocated Resources – ssh

Another way to use the allocated resources is to connect directly to the node containing the allocated resources with ssh.

Example:

[abc123@wind]:~$ salloc -c 4 --mem=4G

salloc: Granted job allocation 9991277

salloc: Nodes cn69 are ready for job

[abc123@wind]:~$ ssh cn69

[abc123@cn69]:~$ hostname

cn69

Once the prompt shows a compute node name, you are ready to run your programs.
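If you did not note the node name from the salloc output, it is also stored in SLURM_JOB_NODELIST. For multi-node allocations that variable holds a compressed hostlist (for example, something like cn[51-52]), and scontrol show hostnames expands it to one name per line. A minimal sketch that connects to the first allocated node; the name ssh_alloc is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ssh to the first node of the current salloc allocation.
ssh_alloc() {
  if [ -z "${SLURM_JOB_NODELIST:-}" ]; then
    echo "ssh_alloc: no allocation found; run salloc first" >&2
    return 1
  fi
  local node
  # Expand the hostlist into one hostname per line and keep the first.
  node=$(scontrol show hostnames "$SLURM_JOB_NODELIST" | head -n 1)
  ssh "$node"
}
```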

Deallocating salloc Resources

Once you are done with an interactive job, there are two ways to deallocate your resources.

The first method is to exit the salloc subshell with the exit command.

[abc123@wind]:~$ exit

exit

salloc: Relinquishing job allocation 10027941

[abc123@wind]:~$

If this method is unavailable, you can instead run the scancel command with the job ID that salloc reported:

[abc123@cn51]:~$ exit

logout

Connection to cn51 closed.

[abc123@wind]:~$ scancel 9991298

salloc: Job allocation 9991298 has been revoked.

Note: If you run the scancel command while inside the salloc subshell, you will remain in the subshell but will no longer have the resources provided by salloc.
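scancel needs the job ID. Inside the salloc subshell it is available as SLURM_JOB_ID; elsewhere, squeue -u "$USER" lists your jobs and their IDs. The sketch below tries the first and falls back to the second; the name release_alloc is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cancel the allocation recorded in this shell, or list
# your jobs so you can find the ID when no allocation is recorded here.
release_alloc() {
  if [ -n "${SLURM_JOB_ID:-}" ]; then
    scancel "$SLURM_JOB_ID"
  else
    echo "No SLURM_JOB_ID in this shell; your jobs are:" >&2
    squeue -u "$USER"
  fi
}
```

As with scancel itself, running this inside the salloc subshell leaves you in that subshell after the allocation is revoked, so type exit afterwards.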

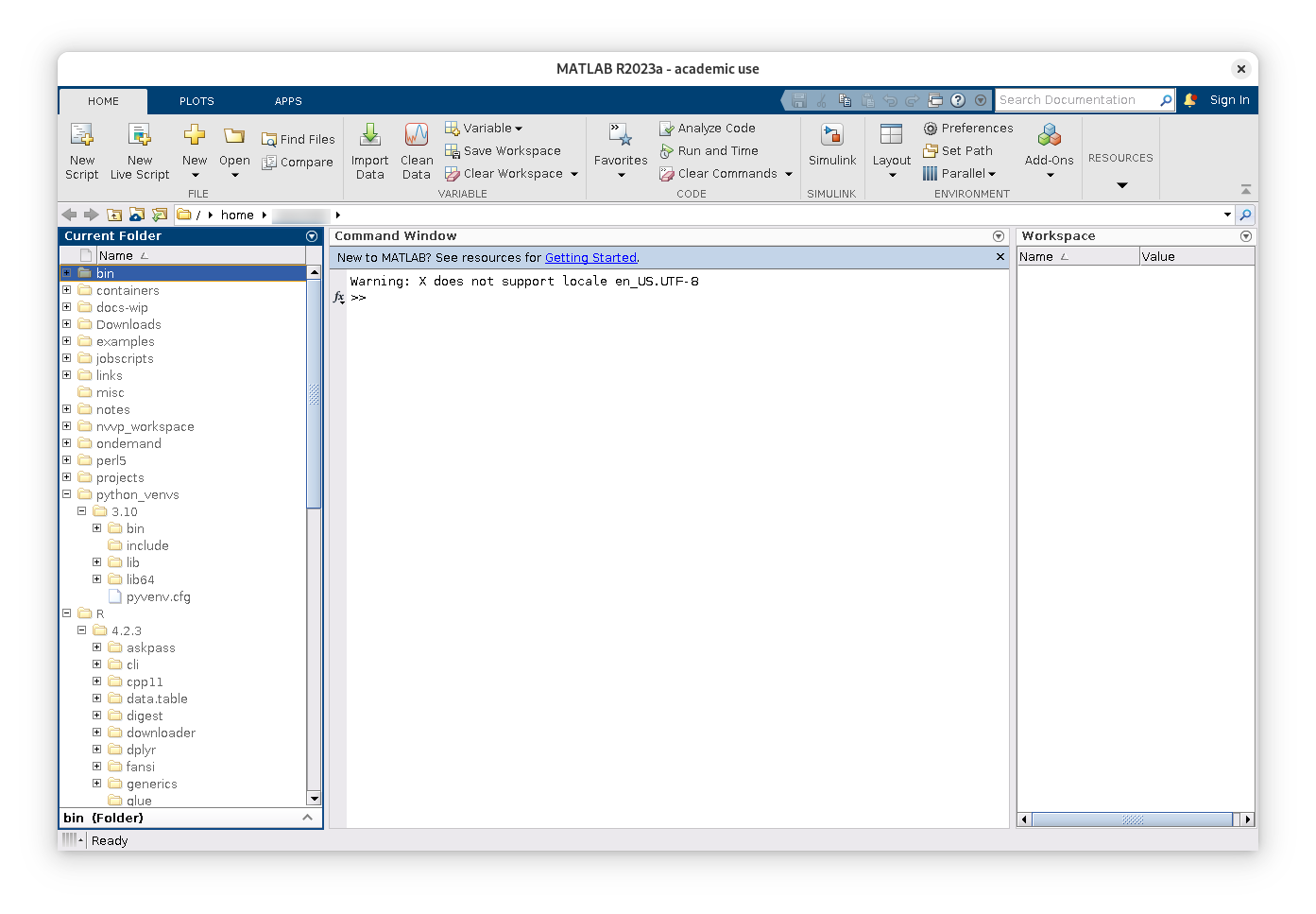

Example: Running Matlab in an Interactive Job

This example shows how to run a GUI application in an interactive job. For this to work, you must have X11 forwarding set up.
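Before launching a GUI program on the compute node, you can check that X11 forwarding reached your shell: the DISPLAY environment variable is set when forwarding is active. This is a minimal sketch; check_x11 is a hypothetical helper, and a set DISPLAY does not by itself guarantee that the connection works.

```shell
#!/usr/bin/env bash
# Hypothetical helper: warn before starting a GUI program if no X display
# was forwarded to this shell (DISPLAY is empty or unset).
check_x11() {
  if [ -z "${DISPLAY:-}" ]; then
    echo "DISPLAY is not set; X11 forwarding is not active in this shell" >&2
    return 1
  fi
  echo "X11 display: $DISPLAY"
}
```

For example, on the compute node you could run check_x11 && matlab so that MATLAB only starts when a display is available.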

Using srun

[abc123@wind]:~$ srun -c4 --mem=4GB --x11 --pty bash

[abc123@cn51]:~$ module load matlab

[abc123@cn51]:~$ matlab

MATLAB is selecting SOFTWARE OPENGL rendering.

[abc123@cn51]:~$ exit

exit

[abc123@wind]:~$

Using salloc

[abc123@wind]:~$ salloc -c4 --mem=4GB

salloc: Granted job allocation 9991312

salloc: Nodes cn51 are ready for job

[abc123@wind]:~$ ssh cn51

[abc123@cn51]:~$ module load matlab

[abc123@cn51]:~$ matlab

MATLAB is selecting SOFTWARE OPENGL rendering.

[abc123@cn51]:~$ exit

exit

Connection to cn51 closed.

[abc123@wind]:~$ scancel 9991312

salloc: Job allocation 9991312 has been revoked.