Using the Monsoon Cluster: Introduction

We use Slurm for resource management and job scheduling. Job priority is determined by several factors: fairshare (the most heavily weighted), as well as the job’s age, partition, and size.

A Slurm cheat sheet is available if you have used Slurm before.

Viewing the Cluster Status

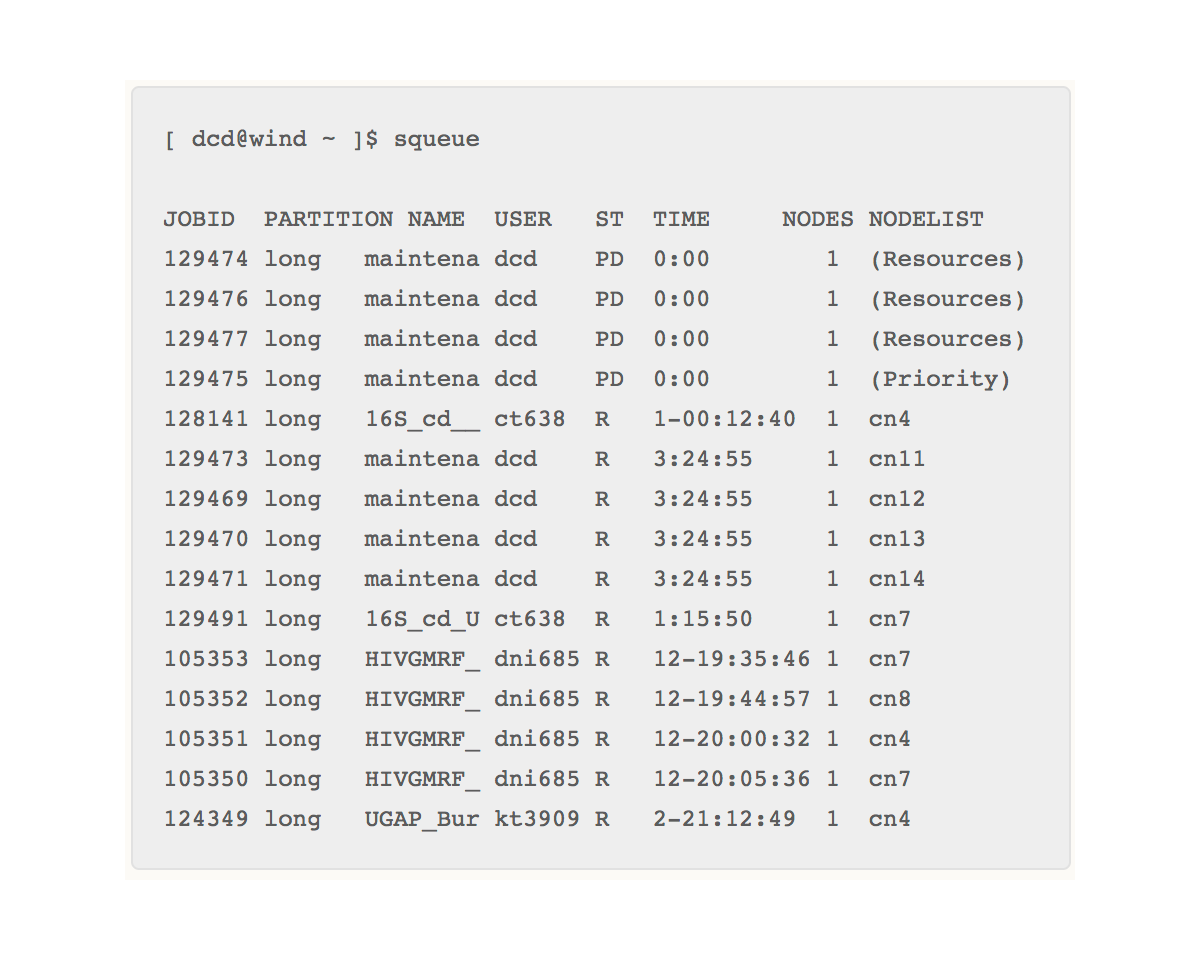

While logged in to one of Monsoon’s login nodes (wind or rain), you can inspect the state of the queues with the “squeue” command:

By default, “squeue” lists both running and pending jobs. Jobs with “R” in the “ST” column are running; jobs with “PD” in that column are pending.

The “TIME” column shows how long each job has been running. In this example, four jobs have been running for almost 13 days.
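As a quick way to summarize the queue, the sketch below counts jobs per state code. The helper name `count_states` and the sample data are hypothetical, made up for illustration. On a login node, the input would instead come from `squeue -h -o "%t"`, which prints one compact state code per job with no header.

```shell
# Count jobs per state code, given one state code per line on stdin.
# Prints "<state> <count>" pairs sorted by state.
count_states() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Hypothetical sample standing in for the output of: squeue -h -o "%t"
sample_states='R
R
PD
R
PD'

printf '%s\n' "$sample_states" | count_states

# With Slurm available, the same helper could be fed live data:
#   squeue -h -o "%t" | count_states
# To list only your own jobs:
#   squeue -u "$USER"
```

For the sample above, the helper reports two pending jobs and three running jobs.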

It might appear that the cluster’s resources are almost fully allocated because there are jobs in the pending state, but this is not necessarily the case. The pending jobs may be asking for more resources than are currently available on the cluster. To find out more about the cluster state, use the “sinfo” command.

[ abc123@wind ~ ]$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

core up 14-00:00:0 7 mix cn[4,7-8,11-14]

core up 14-00:00:0 7 alloc cn[1-3,5-6,9-10,15]

This shows the partitions of nodes defined in Slurm, of which there is only one: “core”. Free cores (CPUs) are available because some nodes are in the “mix” state. Nodes in the “mix” state have only some of their cores allocated, whereas nodes with all cores allocated are in the “alloc” state.
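To see at a glance how many nodes are in each state, the sinfo table can be totaled by its STATE column. The following is a minimal sketch: the helper name `nodes_by_state` and the sample table are hypothetical, and it assumes the default six-column sinfo layout shown above (NODES in column 4, STATE in column 5).

```shell
# Sum the NODES column (4th) per STATE (5th) from a sinfo-style table on stdin.
# The first line is treated as the header and skipped.
nodes_by_state() {
    awk 'NR > 1 { n[$5] += $4 } END { for (s in n) print s, n[s] }' | sort
}

# Hypothetical sample in the default sinfo layout (not real cluster output).
sample_sinfo='PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
core up 14-00:00:00 7 mix cn[4,7-8,11-14]
core up 14-00:00:00 8 alloc cn[1-3,5-6,9-10,15]
core up 14-00:00:00 2 idle cn[16-17]'

printf '%s\n' "$sample_sinfo" | nodes_by_state

# With Slurm available, the same helper could be fed live data:
#   sinfo | nodes_by_state
```

Any nodes reported as “mix” or “idle” indicate that at least some cores are free.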