

Submitting Your First Job

To submit work to the cluster, we must first put together a job script that tells Slurm what resources our application requires. In addition to resources, the script tells Slurm what command or application to run.

A Slurm job script is a bash shell script with special comments starting with “#SBATCH”. Example job scripts are available in “/common/contrib/examples/job_scripts”.

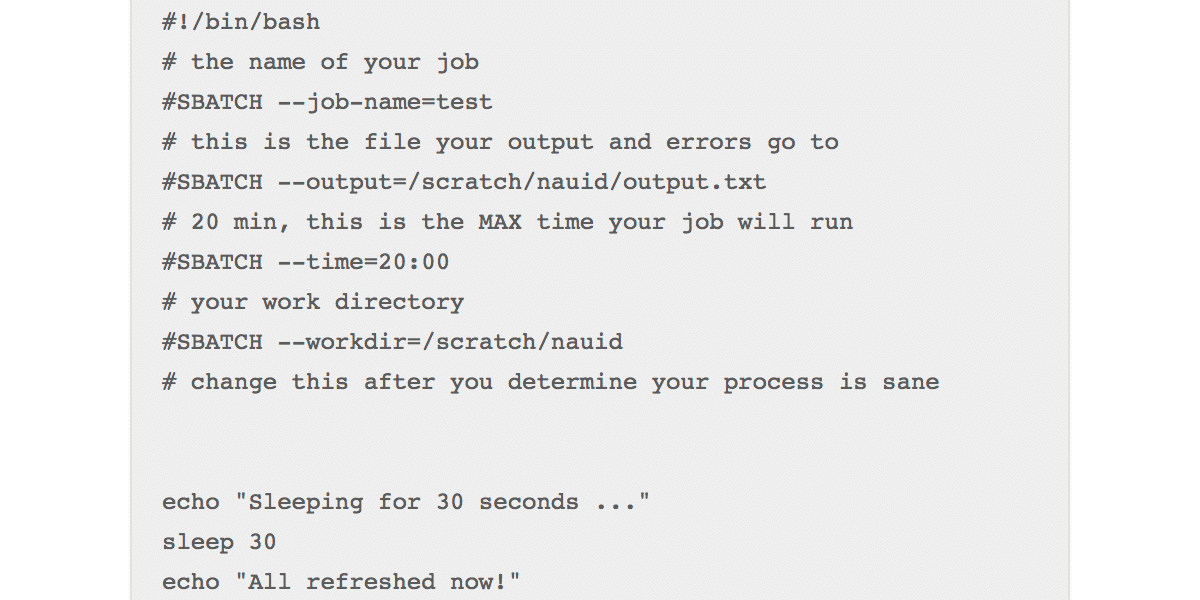

The first line in the job script, “#!/bin/bash”, signifies that this file is a bash script. As you might already know, any line in a bash script that begins with “#” is a comment and is therefore ignored when the script runs.

However, in this context, any line that begins with “#SBATCH” is actually a directive to the Slurm scheduler that tells it how to prioritize, schedule, and place your job. The “--time” option sets the maximum amount of time your job will be allowed to run. This is very important for scheduling your jobs efficiently: in general, the shorter the time limit you request, the sooner your job is likely to start.

The last three lines are the ‘payload’ (the work being done). In this case our job simply prints a message, sleeps for 30 seconds (pretending to do something), and then wakes up and prints a final message.
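Because the script itself appears only as an image, here is a text sketch of what it might look like. Treat the directive values as assumptions: the job name “test” is taken from the NAME column of the squeue output on this page, the output path is the one quoted in the text (“nauid” is presumably a placeholder for your own user ID), and the one-minute “--time” value is invented, since the original limit is not stated. Besides HH:MM:SS, sbatch also accepts “--time” values written as minutes, minutes:seconds, days-hours, days-hours:minutes, or days-hours:minutes:seconds.

```shell
#!/bin/bash
# simplejob.sh: a sketch of the job script described above. The original is
# only shown as an image, so the directive values below are assumptions.
# SBATCH directives must come before the first executable command.

# Job name; "test" matches the NAME column in the squeue output.
#SBATCH --job-name=test
# File that receives the job's output (nauid is presumably a placeholder).
#SBATCH --output=/scratch/nauid/output.txt
# Maximum run time as HH:MM:SS; this 1-minute limit is an assumed value.
#SBATCH --time=00:01:00

echo "Sleeping for 30 seconds ..."
sleep 30
echo "All refreshed now"
```

Since the #SBATCH lines are ordinary comments to bash, running `bash simplejob.sh` would just execute the payload with no Slurm resource requests; it is submitting the file with sbatch that makes Slurm read the directives.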

Now let's submit the job to the cluster:

[dcd@wind ~ ]$ sbatch simplejob.sh

Submitted batch job 138405

Slurm responds with a job ID, “138405”. You can use this job ID to monitor your job's progress.
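If you plan to reuse the job ID in later commands, it can help to capture it in a shell variable. The sketch below uses a hypothetical helper, `extract_jobid`, that keeps only the last word of the “Submitted batch job 138405” message. sbatch's own `--parsable` option is an alternative: it prints just the job ID (followed by `;clustername` on multi-cluster setups).

```shell
#!/bin/bash
# extract_jobid is a hypothetical helper: given sbatch's message
# "Submitted batch job <ID>", it prints only the job ID.
extract_jobid() {
    # ${1##* } removes everything up to and including the last space.
    printf '%s\n' "${1##* }"
}

# Example with the message from the session above (prints 138405):
extract_jobid "Submitted batch job 138405"

# On the cluster you could capture the ID at submission time:
#   jobid=$(extract_jobid "$(sbatch simplejob.sh)")
# or let sbatch print only the ID:
#   jobid=$(sbatch --parsable simplejob.sh)
# sbatch --wait simplejob.sh would instead block until the job finishes.
```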

Let's look for job 138405 in the queue:

[dcd@wind ~ ]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

138405 core test dcd R 0:05 1 wind

We can see that our job is in the running state (“R” in the ST column) in the “core” partition, with the work being done on the node wind. Now that the job is running, we can inspect its output by viewing the output file that we specified in our job script, “/scratch/nauid/output.txt”.

[dcd@wind ~ ]$ cat /scratch/nauid/output.txt

Sleeping for 30 seconds ...

All refreshed now

Great: the output that normally would have been printed to the screen has been captured in the output file that we specified in our job script.
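On a busy cluster a plain `squeue` can list many other users' jobs. The sketch below defines a hypothetical `job_state` helper that asks squeue about a single job, using its standard `-h` (no header), `-j` (job ID) and `-o "%T"` (long-form state) options. The commented lines show `squeue -u "$USER"` for listing only your own jobs and `tail -f` for following the output file while the job is still writing to it.

```shell
#!/bin/bash
# job_state is a hypothetical helper: print only the state of one job
# (e.g. PENDING or RUNNING). -h drops the header line, -j selects the job,
# and -o "%T" limits the output to the long-form job state.
job_state() {
    squeue -h -j "$1" -o "%T"
}

# Usage on the cluster (not executed here):
#   squeue -u "$USER"                    # list only your own jobs
#   job_state 138405                     # e.g. RUNNING
#   tail -f /scratch/nauid/output.txt    # follow the output as it is written
```

Once a finished job has been purged from the queue, squeue no longer reports it, so `job_state` prints nothing; this is a quick check for queued and running jobs rather than a record of completed ones.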